Well, that is one way of describing it, I guess. Do you remember the incident in March 2018 when a pedestrian was struck and killed by a ‘self-driving’ Uber car?

The driver was seemingly distracted at the time and was apparently relying on the software to drive the car.

However, the software did not understand that people do not always cross roads at marked crossings. Some people (many people?) cross wherever they like when they judge it safe to do so. The programmers never thought of that.

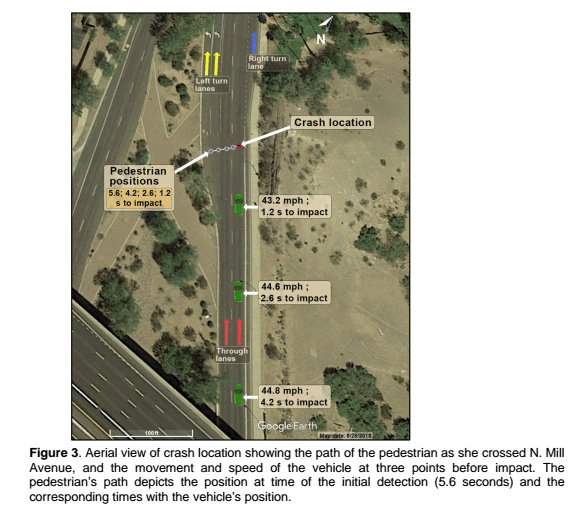

> Although the [system] detected the pedestrian nearly six seconds before impact … it never classified her as a pedestrian, because she was crossing at a location without a crosswalk [and] the system design did not include a consideration for jaywalking pedestrians.
>
> *NTSB report*

The vehicle didn’t calculate that it could collide with the woman until just 1.2 seconds before impact, by which point it was too late to brake.

The report says that the system detected her about six seconds before impact but didn’t classify her as a pedestrian. What did it classify her as, one wonders?

After recognising the pedestrian (too late), the vehicle then wasted a further second calculating an alternative path or waiting for the driver to take control. Uber has since eliminated this function in a software update. How comforting!

According to reports, prosecutors have absolved Uber of liability but are still considering criminal charges against the driver.

Perhaps they should test these cars in New Zealand, as no one can be sued for personal injury here and the ACC will pay all the bills when some other serious software flaw manifests itself.

“Over my dead body!” – did I hear you say?